HPC/Submitting and Managing Jobs/Example Job Script


Revision as of 22:19, November 12, 2012

Introduction

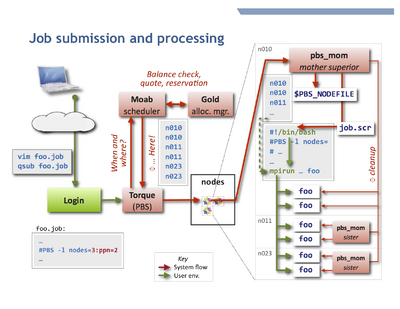

A Torque job script is usually a shell script that begins with PBS directives. Directives are comment lines to the shell but are interpreted by Torque, and take the form

#PBS qsub_options

The job script is read by the qsub job submission program, which interprets the directives and places the job in the queue accordingly. Consult the qsub man page for the accepted options and for the environment variables provided to the job script at execution time.

- Note

- Place directives only at the beginning of the job script. Torque ignores directives after the first executable statement in the script. Empty lines are allowed, but not recommended. Best practice is to have a single block of directives at the beginning of the file.

- Advanced usage

- The job script may be written in any scripting language, such as Perl or Python. The interpreter is specified in the first script line in Unix hash-bang syntax, e.g. #!/usr/bin/perl, or by using the qsub -S path_list option.
- The default directive token #PBS can be changed or unset entirely with the qsub -C option; see qsub, sec. Extended Description.
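The rule that directives end at the first executable statement can be illustrated with a small Python sketch. It collects the options from the leading #PBS lines and stops at the first line that is neither empty nor a "#"-style comment. This is an illustration of the rule as stated in the note above, not Torque's actual parser; the function name pbs_directives and its prefix parameter (standing in for a token changed with qsub -C) are hypothetical, and leading whitespace is ignored as a simplification.

```python
def pbs_directives(script_text, prefix="#PBS"):
    """Return the option strings of a script's leading directive block.

    Illustrates the rule described above (not Torque's actual parser):
    scanning stops at the first line that is not empty, not a directive,
    and not a '#'-style comment. Leading whitespace is ignored here as a
    simplification.
    """
    options = []
    for line in script_text.splitlines():
        s = line.strip()
        if not s:
            continue                      # empty line: skipped
        parts = s.split(None, 1)
        if parts[0] == prefix:
            # directive: keep the qsub options that follow the token
            options.append(parts[1] if len(parts) > 1 else "")
        elif s.startswith("#"):
            continue                      # plain comment, including the "#!" line
        else:
            break                         # first executable statement
    return options


example = """#!/bin/bash
#PBS -l nodes=5:ppn=8
# an ordinary comment
#PBS -N job_name
cd $PBS_O_WORKDIR
#PBS -l walltime=0:10:00
"""

# The walltime directive comes after "cd" and is therefore ignored.
print(pbs_directives(example))   # ['-l nodes=5:ppn=8', '-N job_name']
```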

Application-specific job scripts

For most scalar and MPI-based parallel jobs on Carbon, the scripts in the next section will be appropriate.

Some applications, however, require customizations, typically copying $TMPDIR to an app-specific variable or calling MPI through app-specific wrapper scripts.

Such application-specific custom scripts are located in the root directory of the application under /opt/soft and can typically be reached as:

$APPNAME_HOME/APPNAME.job

or

$APPNAME_HOME/sample.job

where APPNAME is the module name in all uppercase, with "-" (minus) characters replaced by "_" (underscore).

To find variables of this form:

env | grep _HOME
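The naming rule for these variables is mechanical, so it can be sketched in a few lines of Python. The helper app_home_var applies the rule above; home_vars is a slightly stricter variant of env | grep _HOME that matches only variable names ending in _HOME (grep also matches values). Both function names are hypothetical, and "quantum-espresso" is used only as an illustrative module name, not a claim about which modules Carbon provides.

```python
import os


def app_home_var(module_name):
    """Variable holding a module's root directory, following the rule above:
    upper-case the module name, replace '-' by '_', and append '_HOME'."""
    return module_name.upper().replace("-", "_") + "_HOME"


def home_vars(env=None):
    """Names of all environment variables ending in _HOME.

    Stricter than `env | grep _HOME`, which also matches variable values.
    """
    env = os.environ if env is None else env
    return sorted(name for name in env if name.endswith("_HOME"))


# "quantum-espresso" is a hypothetical module name used for illustration.
print(app_home_var("quantum-espresso"))   # QUANTUM_ESPRESSO_HOME
```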

Generic job scripts

Here are a few example jobs for the most common tasks. Note how the PBS directives are mostly independent of the type of job, except for the node specification.

OpenMPI, InfiniBand

This is the default user environment. The openmpi, icc, ifort, and mkl modules are preloaded in the system's shell startup files.

The InfiniBand fast interconnect is selected in the openmpi module by means of the environment variable $OMPI_MCA_btl.

#!/bin/bash
#
# Basics: Number of nodes, processors per node (ppn), and walltime (hhh:mm:ss)
#PBS -l nodes=5:ppn=8
#PBS -l walltime=0:10:00
#PBS -N job_name
#PBS -A account
#
# File names for stdout and stderr. If not set here, the defaults
# are <JOBNAME>.o<JOBNUM> and <JOBNAME>.e<JOBNUM>
#PBS -o job.out
#PBS -e job.err
#
# Send mail at begin, end, abort, or never (b, e, a, n). Default is "a".
#PBS -m ea

# change into the directory from which the job was submitted with qsub
cd $PBS_O_WORKDIR

# start MPI job over default interconnect; count allocated cores on the fly.
mpirun -machinefile $PBS_NODEFILE -np $PBS_NP \
    programname

- Updated 2012-07: Beginning with Torque 3.0, $PBS_NP can be used instead of the former construct $(wc -l < $PBS_NODEFILE).

- If your program reads from files or takes options and/or arguments, use and adjust one of the following forms:

mpirun -machinefile $PBS_NODEFILE -np $PBS_NP \
    programname < run.in

mpirun -machinefile $PBS_NODEFILE -np $PBS_NP \
    programname -options arguments < run.in

mpirun -machinefile $PBS_NODEFILE -np $PBS_NP \
    programname < run.in > run.out 2> run.err

- In the last form, anything after programname is optional. If you redirect stdout or stderr explicitly as shown (>, 2>), the job-global files job.out and job.err declared earlier will remain empty or contain only output from your shell startup files (which should really be silent) and from the rest of your job script.
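Both $PBS_NP and the older wc -l construct rely on the node file listing one host name per allocated core, so a request of nodes=5:ppn=8 yields 40 lines with each host repeated 8 times. The following Python sketch makes that explicit and also reports cores per host, which can help when checking an allocation. The function name count_slots is hypothetical; outside a running job $PBS_NODEFILE is not set and the sketch prints nothing.

```python
import os
from collections import Counter


def count_slots(lines):
    """Return (total cores, cores per host) from node-file lines.

    Torque writes one host name per allocated core, so nodes=5:ppn=8
    gives 40 lines with each host repeated 8 times. Blank lines are skipped.
    """
    hosts = [line.strip() for line in lines if line.strip()]
    return len(hosts), Counter(hosts)


nodefile = os.environ.get("PBS_NODEFILE")
if nodefile:  # set only inside a running Torque job
    with open(nodefile) as f:
        total, per_host = count_slots(f)
    # total should agree with $PBS_NP (Torque 3.0 and later)
    print(total, os.environ.get("PBS_NP"), dict(per_host))
```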

OpenMPI, Ethernet

To select Ethernet transport, e.g. for embarrassingly parallel jobs, specify an -mca option:

mpirun -machinefile $PBS_NODEFILE -np $PBS_NP \
    -mca btl self,tcp \
    programname
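Since the openmpi module selects InfiniBand through $OMPI_MCA_btl, the same Ethernet selection can also be made through the environment: Open MPI reads MCA parameters from variables named OMPI_MCA_<param>, and an explicit -mca option on the mpirun command line takes precedence over them. The following sketch, for a Python job script that launches mpirun via subprocess, builds such an environment; the helper name ethernet_env is hypothetical.

```python
import os


def ethernet_env(base=None):
    """Return a copy of the environment with Open MPI's BTL set to TCP.

    Open MPI reads MCA parameters from OMPI_MCA_<param> variables, so this
    has the same effect as `-mca btl self,tcp` on the mpirun command line.
    Pass the result as env=... to subprocess.run() when starting mpirun.
    """
    env = dict(os.environ if base is None else base)
    env["OMPI_MCA_btl"] = "self,tcp"
    return env
```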

Intel-MPI

Under Intel-MPI the job script will be:

#!/bin/bash

#PBS ... (same as above)

cd $PBS_O_WORKDIR

mpiexec.hydra -machinefile $PBS_NODEFILE -np $PBS_NP \
    programname

GPU nodes

As of late 2012, Carbon has 28 nodes with 1 NVIDIA C2075 GPU each. To specifically request GPU nodes, add :gpus=1 to the nodes request:

#PBS -l nodes=N:ppn=PPN:gpus=1

- The gpus=… modifier refers to GPUs per node and currently must always be 1.
- GPU support depends on the application, in particular on whether several MPI processes or OpenMP threads can share a GPU. Test and optimize the N and PPN parameters for your situation. Start with nodes=1:ppn=4.
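When job scripts are generated programmatically, the node request can be assembled and checked against the constraint above before submission. This is a minimal sketch; the function name nodes_request is hypothetical, and the only rule it enforces is the one stated above (gpus must be 1).

```python
def nodes_request(nodes, ppn, gpus=None):
    """Build the value of a '#PBS -l nodes=...' request as shown above.

    On Carbon the gpus modifier counts GPUs per node and must be 1,
    so any other value is rejected here.
    """
    if nodes < 1 or ppn < 1:
        raise ValueError("nodes and ppn must be positive")
    spec = f"nodes={nodes}:ppn={ppn}"
    if gpus is not None:
        if gpus != 1:
            raise ValueError("gpus=... must currently be 1")
        spec += ":gpus=1"
    return spec


# Suggested starting point for a GPU job:
print("#PBS -l " + nodes_request(1, 4, gpus=1))   # #PBS -l nodes=1:ppn=4:gpus=1
```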

Other scripting languages

The job scripts shown above are written in bash, a Unix command shell language. However, qsub accepts scripts in almost any scripting language.

The only pieces that Torque reads are the Torque directives, up to the first script command, i.e., a line which is (a) not empty, (b) not a PBS directive, and (c) not a "#"-style comment.

The script interpreter is chosen by the Linux kernel from the first line of the script.

As with any job, the script execution normally begins in the home directory, so one of the first commands in the script should be the equivalent of cd $PBS_O_WORKDIR.

For instance, a job script in Python would typically begin like this:

#!/usr/bin/python
#PBS -l nodes=N:ppn=PPN
#PBS -l walltime=hh:mm:ss
#PBS -N job_name
#PBS -A account
#PBS -o filename
#PBS -j oe
#PBS -m ea

import os

# change into the submission directory; "in" works in Python 2 and 3
# (dict.has_key() no longer exists in Python 3)
if 'PBS_O_WORKDIR' in os.environ:
    os.chdir(os.environ['PBS_O_WORKDIR'])

…

Important: The job script only ever runs in serial, on one core. Any parallelization must be initiated by leveraging MPI or OpenMP explicitly (e.g. http://mpi4py.scipy.org) or implicitly by calling a child application that runs in parallel (e.g. VASP). Such a child script or application either needs to be aware of or be told to use $PBS_NODEFILE.
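For the implicit route, the serial Python job script can hand the node file to a parallel child through mpirun, mirroring the bash examples earlier on this page. The following is a minimal sketch assuming Python 3.5 or later and Open MPI's mpirun on the PATH; the helper names build_mpirun_argv and run_parallel_child are hypothetical, and programname and run.in are the placeholders used above.

```python
import os
import subprocess


def build_mpirun_argv(nodefile, nprocs, program, *args):
    """Argument list for: mpirun -machinefile NODEFILE -np N program args..."""
    return ["mpirun", "-machinefile", nodefile, "-np", str(nprocs), program, *args]


def run_parallel_child(program, *args, stdin_path=None):
    """Start a parallel child application from a serial Python job script.

    Passes the job's node file to mpirun, like the bash examples above.
    Raises subprocess.CalledProcessError if the child exits non-zero.
    """
    nodefile = os.environ["PBS_NODEFILE"]
    nprocs = os.environ.get("PBS_NP")       # provided by Torque 3.0 and later
    if nprocs is None:                      # fallback: one node-file line per core
        with open(nodefile) as f:
            nprocs = sum(1 for line in f if line.strip())
    argv = build_mpirun_argv(nodefile, nprocs, program, *args)
    if stdin_path is None:
        return subprocess.run(argv, check=True).returncode
    with open(stdin_path) as stdin:         # equivalent of "< run.in"
        return subprocess.run(argv, stdin=stdin, check=True).returncode
```

Inside a job, after the os.chdir() shown above, a call such as run_parallel_child("programname", stdin_path="run.in") corresponds to the first redirection form of the bash examples.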

This is not to be confused with using a "regular" bash script and mpirun to start several custom Python interpreters in parallel, which is how GPAW and ATK operate:

#!/bin/bash
…
mpirun -machinefile $PBS_NODEFILE -np $PBS_NP \
    gpaw-python script.py

#!/bin/bash
…
mpiexec.hydra -machinefile $PBS_NODEFILE -np $PBS_NP \
    atkpython script.py

The account parameter

The parameter for option -A account can take the following forms:

cnm23456 - for most jobs, containing your 5-digit proposal number.

user - (the actual string "user", not your user name) for a limited personal startup allocation.

staff - for discretionary access by CNM staff.
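The three accepted forms are easy to check before submission, for instance in a script that generates job files. This sketch only checks the format listed above; it does not verify that the allocation exists or has hours left, and the name is_valid_account is hypothetical.

```python
import re

# Forms listed above: "cnm" plus a 5-digit proposal number,
# the literal string "user", or the literal string "staff".
_ACCOUNT_RE = re.compile(r"cnm\d{5}|user|staff")


def is_valid_account(account):
    """True if `account` has one of the accepted -A forms (format check only)."""
    return _ACCOUNT_RE.fullmatch(account) is not None
```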

You can check your account balance in hours as follows:

mybalance -h

gbalance -u $USER -h

- The relevant column is Available, accounting for amounts reserved by current jobs and credits.

Timed Allocations

The compute time physically provided by Carbon's processors is a perishable resource. Hence, your allocations are time-restricted in a use-it-or-lose-it manner. This is done to encourage consistent use of the machine throughout allocation cycles.

The current scheme is as follows:

- Your allocation is provided in three equal-sized installments.

- All installments are active from the beginning.

- Installments expire in a staggered fashion, currently after 4, 8, and 12 months, respectively. A diagram might illuminate this:

  proposal     proposal
   start         end
     |------------|------> Time
  1  |####............
     |
  2  |########........
     |
  3  |############....
     |
     v
 Installment
   number

 Key:  -  one month on the time axis
       #  installment is active
       .  installment is inactive

- Your jobs will, sensibly, be booked against the installments that expire the earliest.
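The scheme above (three equal installments, all active from the start, expiring after 4, 8, and 12 months, with usage booked against the earliest-expiring one first) can be modeled in a short Python sketch. It illustrates the stated policy only and is not the site's accounting software: it ignores amounts reserved by running jobs and credits, and the 30,000-hour allocation and all function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Installment:
    hours: float    # hours left in this installment
    expires: int    # month after proposal start at which it expires


def make_installments(total_hours, expiry_months=(4, 8, 12)):
    """Split an allocation into equal installments, all active from month 0."""
    share = total_hours / len(expiry_months)
    return [Installment(share, m) for m in expiry_months]


def charge(installments, hours, month):
    """Book `hours` used at `month`, earliest-expiring active installment first.

    Returns the hours that could not be covered by active installments.
    """
    active = sorted((i for i in installments if month < i.expires),
                    key=lambda i: i.expires)
    for inst in active:
        take = min(inst.hours, hours)
        inst.hours -= take
        hours -= take
        if hours <= 0:
            break
    return hours


def available(installments, month):
    """Hours still usable at `month`; expired installments no longer count."""
    return sum(i.hours for i in installments if month < i.expires)


# Hypothetical 30,000-hour allocation with 12,000 hours used in month 2:
alloc = make_installments(30000)
unbooked = charge(alloc, 12000, month=2)
print(unbooked, [i.hours for i in alloc], available(alloc, month=5))
```

In this example the first installment is used up before it expires, so nothing is lost at month 4; had the 12,000 hours not been used by then, the first 10,000-hour installment would have expired unused.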

Advanced node selection

You can refine the node selection (normally done via the PBS resource -l nodes=…) to finely control your node and core allocations. You may need to do so for the following reasons:

- select specific node hardware generations,

- permit shared vs. exclusive node access,

- vary PPN across the nodes of a job,

- accommodate multithreading (OpenMP).

See HPC/Submitting Jobs/Advanced node selection for these topics.