HPC/Submitting and Managing Jobs/Example Job Script

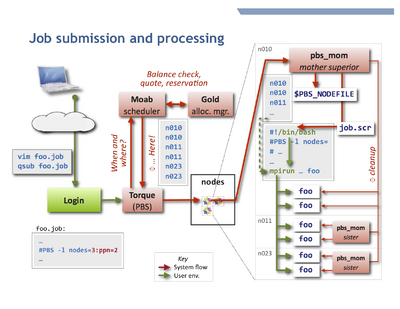

A Torque job script is usually a shell script that begins with PBS directives. These are comment lines to the shell that are interpreted by Torque, in the form

#PBS qsub_options

- Note

- Torque ignores directives after the first executable statement in the script. Place directives at the beginning of the job script. Empty lines are allowed, but not recommended. Best practice is to have a single comment-block at the beginning of the file.

- Advanced usage

- The job script may be written in any scripting language, such as Perl or Python. The interpreter is specified in the first script line in Unix hash-bang syntax (#!/usr/bin/perl), or using the qsub -S path_list option.

- The default directive token #PBS can be changed or unset entirely with the qsub -C option; see qsub, sec. Extended Description.
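
Because Torque stops reading directives at the first executable statement, a misplaced #PBS line is silently ignored. A minimal sketch of a pre-submission check follows; late_directives is a hypothetical helper name, not a Torque command, and it assumes the default #PBS token at the very start of a line.

```shell
#!/bin/bash
# Hypothetical helper (not part of Torque): list #PBS lines that appear
# after the first executable statement, where Torque would ignore them.
# Assumes the default "#PBS" token at the very start of a line.
late_directives() {
    awk '
        /^#PBS/              { if (seen_code) print FILENAME ":" FNR ": " $0; next }
        /^[[:space:]]*(#|$)/ { next }          # other comments and blank lines
                             { seen_code = 1 } # anything else counts as executable
    ' "$1"
}

# Usage: late_directives job.sh   (prints nothing if all directives are placed correctly)
```

Each reported line has the form file:line: directive, so the offending directive can be moved into the comment block at the top of the script.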

Example job file

Here are a few example jobs for the most common tasks. Note how the PBS directives are mostly independent from the type of job, except for the node specification.

OpenMPI, InfiniBand

This is the default user environment. The openmpi, icc, ifort, and mkl modules are loaded by the system.

#!/bin/bash

#

# Basics: Number of nodes, processors per node (ppn), and walltime (hhh:mm:ss)

#PBS -l nodes=5:ppn=8

#PBS -l walltime=0:10:00

#PBS -N job_name

#PBS -A account

#

# File names for stdout and stderr. If not set here, the defaults

# are <JOBNAME>.o<JOBNUM> and <JOBNAME>.e<JOBNUM>

#PBS -o job.out

#PBS -e job.err

#

# Send mail at begin, end, abort, or never (b, e, a, n). Default is "a".

#PBS -m ea

# change into the directory from which qsub was executed

cd $PBS_O_WORKDIR

# count allocated cores

nprocs=$(wc -l < $PBS_NODEFILE)

# start MPI job over default interconnect

mpirun -machinefile $PBS_NODEFILE -np $nprocs \

programname
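
For reference, Torque writes one line per allocated core to the file named by $PBS_NODEFILE, so counting its lines yields the total core count. The sketch below imitates this outside of a job with a mock node file; the host names n001 and n002 and the 2×3 layout are made up for illustration.

```shell
#!/bin/bash
# Mock of the node file for a request like "nodes=2:ppn=3".
# Host names are placeholders; inside a real job Torque sets PBS_NODEFILE.
PBS_NODEFILE=$(mktemp)
printf '%s\n' n001 n001 n001 n002 n002 n002 > "$PBS_NODEFILE"

nprocs=$(wc -l < "$PBS_NODEFILE")           # total cores: one line per core
nnodes=$(sort -u "$PBS_NODEFILE" | wc -l)   # distinct hosts

echo "cores: $((nprocs)), nodes: $((nnodes))"
rm -f "$PBS_NODEFILE"
```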

- If your program reads from files or takes options and/or arguments, use and adjust one of the following forms:

mpirun -machinefile $PBS_NODEFILE -np $nprocs \

programname < run.in

mpirun -machinefile $PBS_NODEFILE -np $nprocs \

programname -options arguments < run.in

mpirun -machinefile $PBS_NODEFILE -np $nprocs \

programname < run.in > run.out 2> run.err

- In the last form, anything after programname is optional. If you use specific redirections for stdout or stderr as shown (>, 2>), the job-global files job.out and job.err declared earlier will remain empty or only contain output from your shell startup files (which should really be silent) and from the rest of your job script.

- InfiniBand (OpenIB) is the default (and fast) interconnect mechanism for MPI jobs. This is configured through the environment variable $OMPI_MCA_btl.

- To select Ethernet transport (e.g. for embarrassingly parallel jobs), specify an -mca option:

mpirun -machinefile $PBS_NODEFILE -np $nprocs \

-mca btl self,tcp \

programname
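
Since the transport is configured through $OMPI_MCA_btl, the same selection can also be made by exporting that variable in the job script before calling mpirun; Open MPI reads MCA parameters from environment variables named OMPI_MCA_<parameter>. A minimal sketch, with the mpirun call left as a comment so it can be tried outside a job:

```shell
#!/bin/bash
# Select Ethernet (TCP) transport for every subsequent mpirun in this script.
# Intended to have the same effect as "-mca btl self,tcp" on the command line.
export OMPI_MCA_btl=self,tcp

echo "MPI transports: $OMPI_MCA_btl"

# Inside a job, the launch line then needs no -mca option:
# mpirun -machinefile $PBS_NODEFILE -np $nprocs programname
```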

Intel-MPI

Intel-MPI is based on MPICH2. It supports both the older MPD (not recommended) and the newer Hydra process manager.

module load impi

Then the job script will be:

#!/bin/bash

#PBS ... (same as above)

cd $PBS_O_WORKDIR

mpiexec.hydra \

-machinefile $PBS_NODEFILE \

-np $(wc -l < $PBS_NODEFILE) \

programname

The account parameter

The parameter for option -A account is in most cases the CNM proposal, specified as follows:

- cnm123 (3 digits) for proposals below 1000,

- cnm01234 (5 digits, 0-padded) for proposals from 1000 onwards,

- user (the actual string "user", not your user name) for a limited personal startup allocation,

- staff for discretionary access by staff.
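
The naming rule for proposal accounts can be written as a small helper. This is a sketch only: account_for is a hypothetical name, and zero-padding proposal numbers below 100 to three digits is an assumption not stated above.

```shell
#!/bin/bash
# Hypothetical helper: derive the -A account string from a proposal number.
# Assumption: numbers below 100 are zero-padded to 3 digits (e.g. 45 -> cnm045).
account_for() {
    local n=$((10#$1))            # force base 10 so "0123" is not read as octal
    if [ "$n" -lt 1000 ]; then
        printf 'cnm%03d\n' "$n"   # 3 digits for proposals below 1000
    else
        printf 'cnm%05d\n' "$n"   # 5 digits, 0-padded, from 1000 onwards
    fi
}

account_for 123     # cnm123
account_for 1234    # cnm01234
```

The result can be passed on the command line, for example qsub -A "$(account_for 1234)" followed by the job script name, instead of hard-coding the #PBS -A line.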

You can check your account balance in hours as follows:

mybalance -h

gbalance -u $USER -h

Advanced node selection

You can refine the node selection (normally done via the PBS resource -l nodes=…) to finely control your node and core allocations. You may need to do so for the following reasons:

- select specific node hardware generations,

- permit shared vs. exclusive node access,

- vary PPN across the nodes of a job,

- accommodate multithreading (OpenMP).

See HPC/Submitting Jobs/Advanced node selection for these topics.