HPC/Applications/lumerical: Difference between revisions

| Line 94: | Line 94: | ||

* Push Edit. | * Push Edit. | ||

* Enter the advanced options as shown: | * Enter the advanced options as shown: | ||

*: [[Image:HPC 2014-04-28 Lumerical optimizations 3.png]] | |||

<pre> | <pre> | ||

Job launching: Standard | Job launching: Standard | ||

| Line 106: | Line 107: | ||

Set FDTD engine: (uncheck) | Set FDTD engine: (uncheck) | ||

</pre> | </pre> | ||

* Push OK. The window will close. | * Push OK. The window will close. | ||

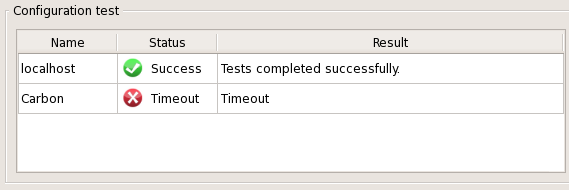

* In the toplevel Resource Configuration window you may want to push "Run tests". This may work and you get "MPICH tests completed successfully". Likely, this will turn into "Timeout" shortly afterwards. | * In the toplevel Resource Configuration window you may want to push "Run tests". This may work and you get "MPICH tests completed successfully". Likely, this will turn into "Timeout" shortly afterwards. | ||

Revision as of 21:49, April 28, 2014

Introduction

Lumerical has two main components:

- CAD: The GUI. We have licensed 2 concurrent seats.

- FDTD: The compute engine, called either by the GUI or in a PBS job file. We have 2 + 10 concurrent seats.

Manual and Knowledge Base

http://docs.lumerical.com/en/fdtd/knowledge_base.html

Running compute jobs (FDTD)

To run a compute job (usually in parallel), save your input file in CAD as an .fsp file. Then copy the following job template and customize it with your account and file names:

$LUMERICAL_HOME/sample.job

Note: FDTD does not support checkpointing (thanks to Julian S. for checking). Choose your #PBS -l walltime parameter generously, because a job killed at its walltime limit cannot be resumed.
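Because a job that hits its walltime loses all progress, one way to size #PBS -l walltime is to take an estimate of the run time (for example, the "Max time remaining" reported at the start of a test run) and multiply it by a safety factor. The bash sketch below is only an illustration: the function name padded_walltime and the default factor of 2 are assumptions, not part of Lumerical or TORQUE. Keep in mind that the data collection stage after 100% may add time on top of that estimate.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pad an estimated run time (in seconds) by an integer
# safety factor and print it in the HH:MM:SS form used by "#PBS -l walltime".
padded_walltime() {
  local est_secs=$1 factor=${2:-2}   # assumed default: double the estimate
  local total=$(( est_secs * factor ))
  printf '%02d:%02d:%02d\n' $(( total / 3600 )) $(( total % 3600 / 60 )) $(( total % 60 ))
}

# Example: the sample output further down starts at "16 mins, 4 secs" (964 s).
padded_walltime 964 3    # prints 00:48:12
```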

Memory issues

- Problem

When an FDTD compute job runs in parallel, the memory use of the MPI master process can increase dramatically during the final stage (Data Collection). For example, during the calculation ("cruising") stage each MPI process may be content with 2-4 GB, but in the collection stage the master process (MPI rank 0) may require 10 times as much or more. The other MPI processes apparently sit idle during the collection stage, yet keep running and hold on to their memory, which can amount to a valuable 10–15 GB, say. The increased memory demand may push the node into swap, even on Carbon's "bigmem" nodes, which have 48 GB RAM each. TORQUE (PBS) detects the swap use and at first tolerates it, but after a few minutes it kills the job. In that case, the following error appears in the standard error stream:

```
=>> PBS: job killed: swap rate due to memory oversubscription is too high
mpirun: abort is already in progress...hit ctrl-c again to forcibly terminate
mpirun: killing job...
```

The standard output stream may reach 99% or 100%, then stop:

```
0% complete. Max time remaining: 16 mins, 4 secs. Auto Shutoff: 1
1% complete. Max time remaining: 15 mins, 4 secs. Auto Shutoff: 1
…
98% complete. Max time remaining: 20 secs. Auto Shutoff: 6.21184e-05
99% complete. Max time remaining: 9 secs. Auto Shutoff: 5.5162e-05
100% complete. Max time remaining: 0 secs. Auto Shutoff: 4.51006e-05
```

- In a successful run, the output instead continues with several more lines of data collection messages and, of course, "Simulation completed successfully".
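To tell these outcomes apart after the fact, you can search the job's output files for the messages quoted above. A minimal bash sketch, assuming you pass in the standard output and standard error files of the job (TORQUE typically names them <jobname>.o<jobid> and <jobname>.e<jobid>); the function name fdtd_job_status is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: classify a finished FDTD job from its PBS output files.
#   $1 = standard output file, $2 = standard error file
fdtd_job_status() {
  local out=$1 err=$2
  if grep -q 'swap rate due to memory oversubscription' "$err" 2>/dev/null; then
    echo "killed-swap"      # TORQUE killed the job for swapping
  elif grep -q 'Simulation completed successfully' "$out" 2>/dev/null; then
    echo "completed"        # normal end, including data collection
  else
    echo "incomplete"       # e.g. walltime exceeded or other failure
  fi
}
```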

- Workarounds

- Try to collect less data.

Chris K. from Lumerical Support wrote:

For example, all field monitors allow you to choose which E/H/P fields to collect. If you only care about power transmission through a monitor, you can disable all the E/H/P fields and just collect the 'net power'. You can also control the number of frequency points, and you can enable spatial downsampling, and obviously just make the monitors smaller.
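Why these settings help can be seen from a rough estimate of a frequency-domain field monitor's size: it scales with the number of spatial points, frequency points, and field components. The sketch below assumes each stored value is a complex double (16 bytes) on a 2D monitor and ignores any overhead; it is a back-of-envelope model, not Lumerical's actual storage format, and monitor_bytes is a hypothetical name.

```shell
#!/usr/bin/env bash
# Back-of-envelope estimate of a 2D monitor's data volume.
# Assumption: 16 bytes per complex double value, no metadata overhead.
# Arguments: nx ny nfreq ncomponents [downsample factor]
monitor_bytes() {
  local nx=$1 ny=$2 nfreq=$3 ncomp=$4 ds=${5:-1}
  echo $(( (nx / ds) * (ny / ds) * nfreq * ncomp * 16 ))
}

monitor_bytes 500 500 100 6      # Ex..Hz, 100 frequencies: 2400000000 (~2.4 GB)
monitor_bytes 500 500 100 6 2    # 2x spatial downsampling:  600000000
monitor_bytes 500 500 20 2       # 2 components, 20 frequencies: 160000000
```

Each knob named in the quote (fewer components, fewer frequency points, downsampling, smaller monitors) reduces one factor of the product.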

- Have the master process run on a node of its own, as described at HPC/Submitting and Managing Jobs/Advanced node selection#Different PPN by node. This trades away some compute capacity for memory. Caveat: try to keep the total number of cores highly divisible. Example:

- A typical request for 64 cores:

```
#PBS -l nodes=8:ppn=8
```

- Improved request:

```
#PBS -l nodes=1:ppn=1:bigmem+1:ppn=7+7:ppn=8
#PBS -l naccesspolicy=SINGLEJOB -n
```

Note that the + sign is a field delimiter in the "nodes" specification.

This specification requests the same total number of cores, but splits the load of the original first node over two nodes: one with a single core, and a second with the remaining 7 cores, followed by as many 8-core nodes as needed.

nodes = ( 1:ppn=1 ) + ( 1:ppn=7 ) + ( 7:ppn=8 ) = 1 + 7 + 7 * 8 = 64 cores.

Rank 0 will have the entire RAM of the first node available and is the only rank likely to need "bigmem". The other ranks have modest memory needs and are unlikely to face contention. Usually, Moab will ensure that all ranks run on the same node generation, in this case gen2 (see HPC/Submitting and Managing Jobs/Advanced node selection#Hardware).
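The same rearrangement works for other core counts. A bash sketch, assuming uniform nodes with ppn cores each (ppn of at least 2) and a total that is a multiple of ppn; both function names are hypothetical. The second function recomputes the total from a spec string as a sanity check, assuming every "+"-separated field has the form count:ppn=N, optionally followed by a node property such as :bigmem.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a TORQUE "nodes" spec that gives MPI rank 0 a
# bigmem node of its own while keeping the total core count unchanged.
#   $1 = total cores, $2 = cores per node (total must be a multiple of ppn)
master_alone_spec() {
  local total=$1 ppn=$2
  local full=$(( total / ppn - 1 ))        # full nodes left after splitting the first
  local spec="1:ppn=1:bigmem+1:ppn=$(( ppn - 1 ))"
  if (( full > 0 )); then
    spec+="+${full}:ppn=${ppn}"
  fi
  echo "$spec"
}

# Sum the cores in a spec: each "+"-separated field is count:ppn=N[:property].
spec_cores() {
  local sum=0 field count ppn
  local -a fields
  IFS='+' read -ra fields <<< "$1"
  for field in "${fields[@]}"; do
    count=${field%%:*}                     # leading node count
    ppn=${field#*ppn=}; ppn=${ppn%%:*}     # value after "ppn=", before any ":"
    sum=$(( sum + count * ppn ))
  done
  echo "$sum"
}

spec=$(master_alone_spec 64 8)
echo "$spec"          # 1:ppn=1:bigmem+1:ppn=7+7:ppn=8
spec_cores "$spec"    # 64
```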

Running the GUI (CAD)

There are three means to access Carbon's Lumerical licenses:

- Run on Carbon and display on your machine over X11 forwarding

- Run on Carbon in a VNC virtual desktop and display that desktop on your machine.

- Run on your desktop directly and use license port forwarding.

X11

Use the CAD X11 app on a Carbon login node.

CAD &

- Requires X11 forwarding under SSH.

- Disadvantage: May be slow or unstable.

VNC

Use CAD on Carbon in a VNC virtual desktop.

- Requires a VNC client on your side.

- Advantage: uses compression and hence can be faster than X11.

- Disadvantage: Limited desktop environment.

Native

Run CAD natively on your desktop, but connect to the Lumerical license server on Carbon.

- Advantage: Native graphics speed.

- Caveat: needs an active network connection to a Carbon login node.

Running Optimization Jobs

- Prepare your optimization project as needed, save it as an .fsp file, and, if needed, copy it onto Carbon.

- Open CAD on Carbon.

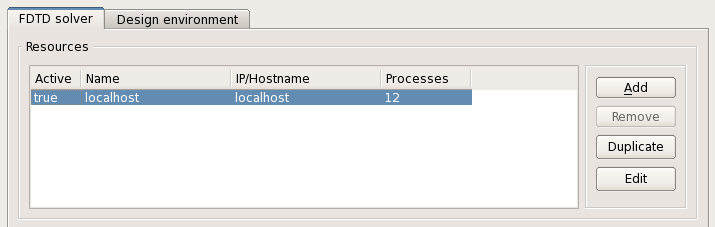

- Click Configure resources under the Simulation menu entry.

- Remove all but the "localhost" entry.

- Push Add.

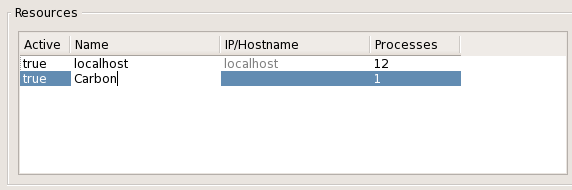

- Double-click the Name column of the new resource entry, enter "Carbon", and press enter.

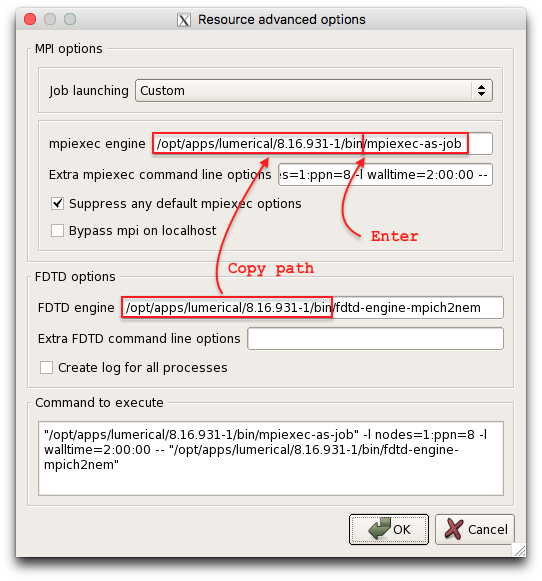

- Push Edit.

- Enter the advanced options as shown:

```
Job launching: Standard
Use Processor binding …: (uncheck)
Extra mpiexec command line options: either leave empty or enter qsub options followed by "--", e.g.:
    -l nodes=1:ppn=8 -l walltime=2:00:00 --
Suppress any default mpiexec options: (check)
Set mpiexec engine: (check) mpiexec-as-job
Create log of all processes: (uncheck)
Extra FDTD … options: (leave empty)
Set FDTD engine: (uncheck)
```

- Push OK. The window will close.

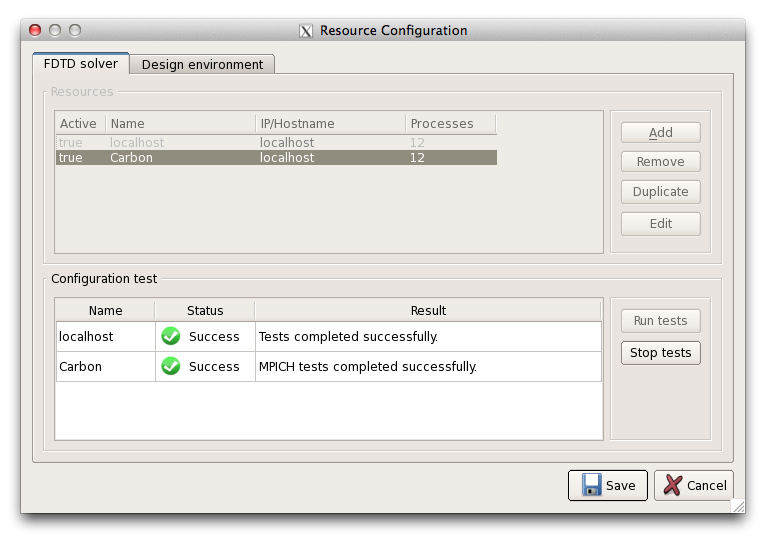

- In the top-level Resource Configuration window, you may want to push "Run tests". This may work at first and report "MPICH tests completed successfully", but the result will likely turn into "Timeout" shortly afterwards.

- Duplicate the "Carbon" resource about 5-8 times.

- Push Save.

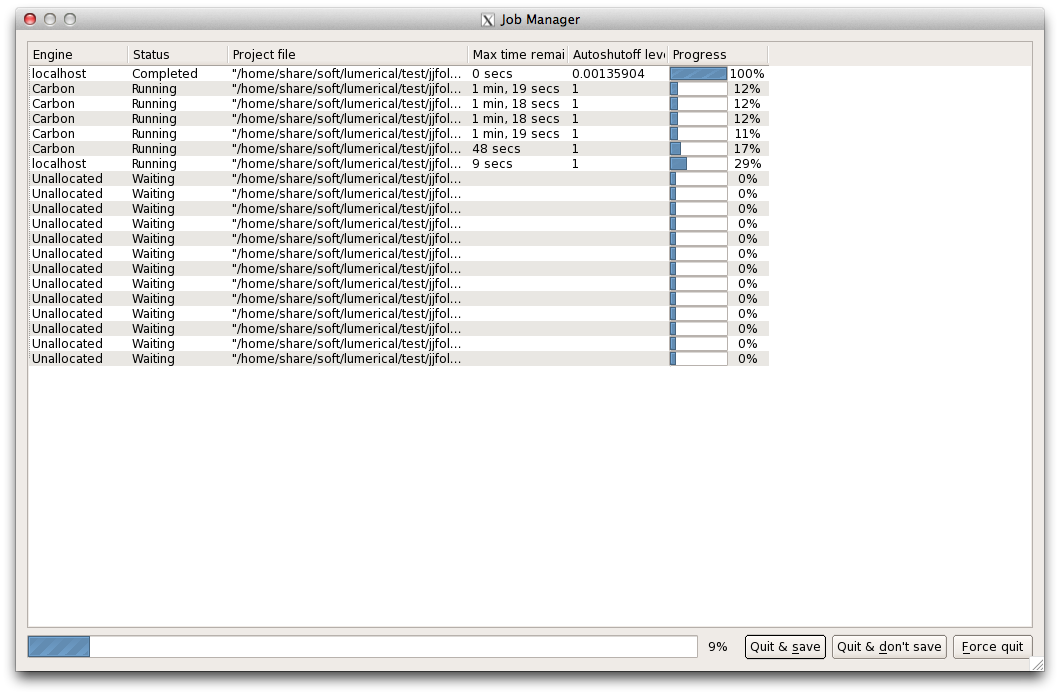

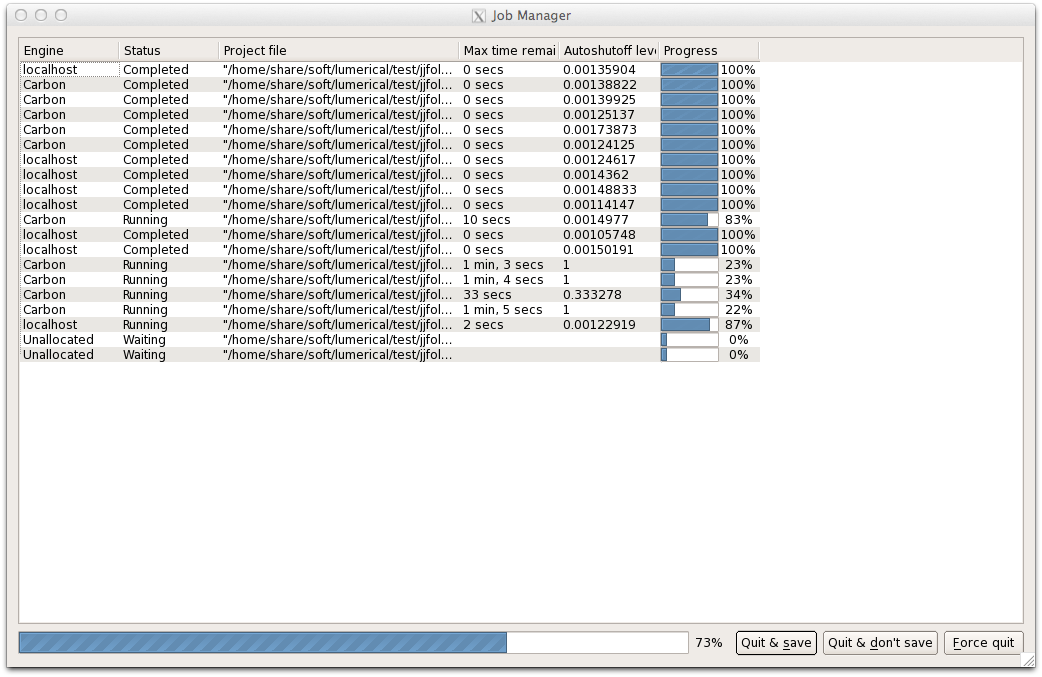

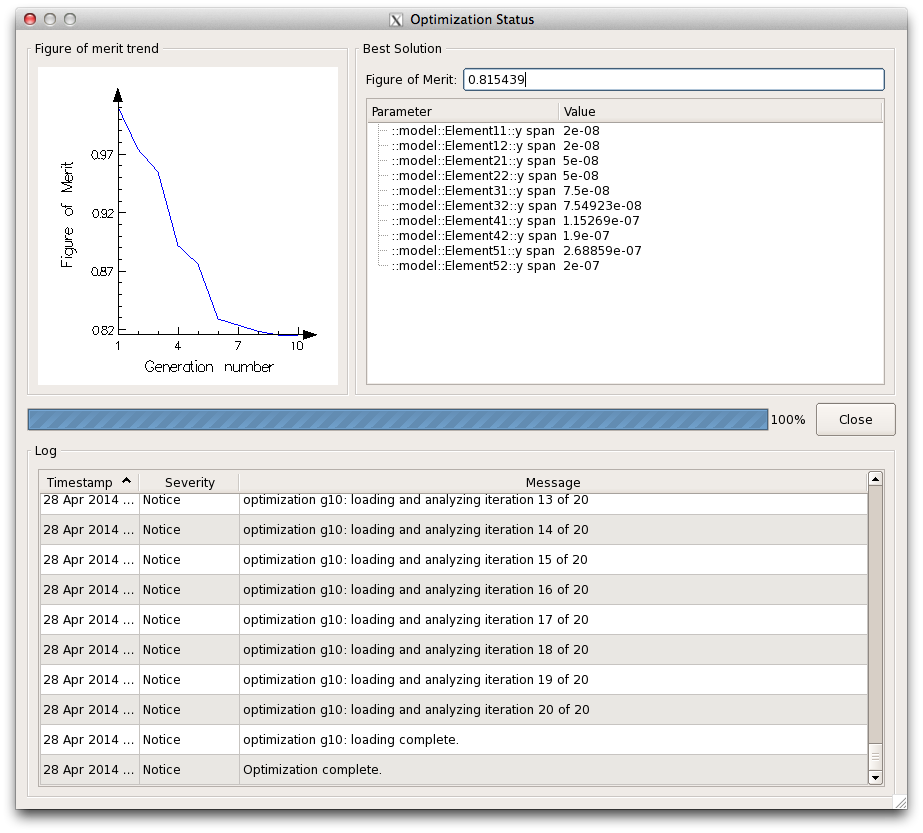

- You are ready to run the optimization. You will get windows like those shown above. There will be several volleys, each producing a "swarm" point in the figure-of-merit trend plot.

- Hints

- You may go back and edit the Advanced options of a resource, but you must remove all other previously cloned entries, and re-clone the newly edited resource.

- When you provide qsub options under "Extra mpiexec command line options", for example to allow a walltime longer than the default 1 hour, make sure to append "--".
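Since the trailing "--" is easy to forget, you could assemble the string for the "Extra mpiexec command line options" field with a small helper and paste the result into the dialog. A hedged bash sketch; the function name is hypothetical and the qsub options are the example values from the advanced options list above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: join qsub options for the "Extra mpiexec command line
# options" field and make sure the string ends with the required "--".
extra_mpiexec_opts() {
  local opts="$*"
  if [[ -z $opts ]]; then
    printf '\n'                      # leaving the field empty is also allowed
  elif [[ $opts == *' --' || $opts == '--' ]]; then
    printf '%s\n' "$opts"            # already terminated by "--"
  else
    printf '%s --\n' "$opts"         # append the separator
  fi
}

extra_mpiexec_opts -l nodes=1:ppn=8 -l walltime=2:00:00
# prints: -l nodes=1:ppn=8 -l walltime=2:00:00 --
```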

Remote license access

To use #Native access, your desktop copy of Lumerical must be configured to connect to Carbon's license server.

The license mechanism has recently changed.

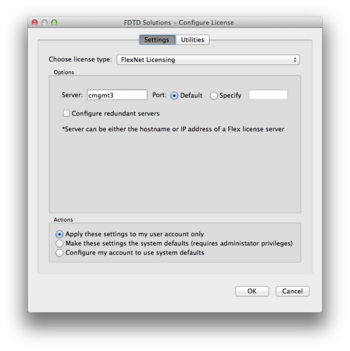

FlexNet Licensing

This is the license mechanism [1] used from Lumerical version 8.6 onwards. To use your desktop copy of Lumerical, follow these steps:

When inside CNM or using VPN – does not apply to Carbon

- Verify that you are inside CNM's network by browsing to http://carbon/

- In the Lumerical License configuration, choose license type: FlexNet Licensing

- Set Server to cmgmt3 or cmgmt3.cnm.anl.gov.

Note: This section does not apply to running CAD or FDTD on Carbon itself.

When outside CNM

- Close any connection to Carbon's login nodes.

- Configure port forwarding for your SSH client (one-time only).

- Mac and Linux users: Add to ~/.ssh/config:

```
…
Host clogin
    LocalForward 27011 mgmt03:27011
    LocalForward 27012 mgmt04:27012
    LocalForward 27013 sched1:27013
…
```

- Windows users: See HPC/Network Access/PuTTY Configuration/Accessing Carbon licenses remotely

- Log in to clogin using ssh (each time). This activates the forwarded ports configured in the previous step.

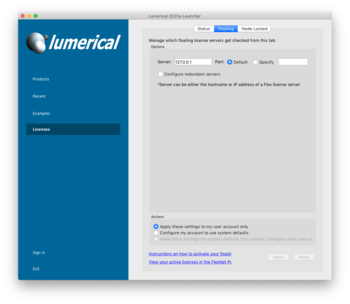

- Install or upgrade Lumerical. When asked for the license information, choose FlexNet licensing and enter the host names and port numbers as shown in the screenshot.

- Start Lumerical. To inspect the license setting, choose About Lumerical from the application menu.

The license setting is stored on Mac and Linux platforms in ~/.config/Lumerical/FDTD\ Solutions.ini and should read:

```
…
[license]
type=flex
flexserver\host=27011@localhost:27012@localhost:27013@localhost
```
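The flexserver\host value is the list of locally forwarded ports from the SSH configuration above, each written as port@localhost and joined by colons. A small bash sketch that derives the line from a port list (the function name is hypothetical; it only prints the line and does not edit the .ini file):

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a list of locally forwarded license ports into the
# "flexserver\host" line used in the [license] section of the .ini file.
flex_host_value() {
  local port
  local -a parts=()
  for port in "$@"; do
    parts+=("${port}@localhost")
  done
  local IFS=':'                                    # join array elements with ":"
  printf 'flexserver\\host=%s\n' "${parts[*]}"     # "\\" prints one backslash
}

flex_host_value 27011 27012 27013
# prints: flexserver\host=27011@localhost:27012@localhost:27013@localhost
```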

Legacy mechanism - Retired July 2013

This was the license mechanism [2] in use before version 8.6. No license tokens for this mechanism remain active.