Running Your Code

Latest revision as of 22:14, April 16, 2025

Careful, this chapter is quite outdated and needs to be rewritten

Running Jobs

Please do not run CPU- or memory-intensive tasks on the login nodes; always run them on the compute nodes. Resource-intensive processes running on login nodes may be killed at any time, without warning or notification.

Getting started

Jobs are submitted to the cluster using Torque and scheduled by Maui. Some documentation of the commands that are used by Torque and Maui can be found on the man pages. For example:

man qsub

To use Torque and Maui, you'll need to make sure the base module is loaded in your shell's setup files:

module load base

Feel free to contact us by email if you need help with Torque or Maui. Note that other documentation you might find regarding Torque and Maui may not apply to the TRACC cluster because of differences in how we've configured the software.

Torque's view of the cluster

Torque and Maui are both used to schedule and run jobs for both the arrow and nhtsa queues.

Submitting jobs

Job scripts

The usual way of running a job with Torque is to submit a shell script. For most commercial applications we have wrapper scripts to automatically generate job scripts; see our application notes for our supported software, or contact your sponsor for more info, then skip ahead to information about qsub.

A simple script to run an MPI job would be

#!/bin/bash
# PBS directives must precede all executable commands
cd $PBS_O_WORKDIR
## HPMPI
# mpirun -hostfile $PBS_NODEFILE ./mpi-application
## Intel MPI
# mpirun ./mpi-application

If you need to load a module in your shell script, do it like this, for scripts written in /bin/sh or /bin/bash:

. /etc/profile.d/modules.sh
module load <some-module>

or like this, if your script is written in /bin/csh or /bin/tcsh:

source /etc/profile.d/modules.csh
module load <some-module>

or like this, for Python:

import sys

sys.path.append("/soft/modules/current/init")

import envmodule

envmodule.module("load <some-module>")

or like this, for Perl:

use lib "/soft/modules/current/init";

use envmodule;

module("load <some-module>");

For other languages, you can usually achieve the same effect by loading the module in your shell's configuration files (.bashrc or .cshrc).

Torque variables

- Torque sets several variables which you can use in your job scripts. See the qsub man page for the complete list.

- All Torque variables begin with "PBS", as Torque used to be known as OpenPBS (which was the precursor of PBS Pro).

- The most important Torque variables to be aware of are

PBS_O_WORKDIR

which is the working directory at the time of job submission, and

PBS_NODEFILE

which contains a list of the compute nodes which Torque has assigned your job, in the form

n001 n001 n001 n001 n001 n001 n001 n001

with each node listed once for each core.
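As a rough illustration of how the node file can be used, the sketch below counts the cores assigned to a job and summarizes them per node. It assumes the file named by PBS_NODEFILE holds one node name per line (one line per core); the function names summarize_nodefile and count_cores are made up for this example and are not part of Torque.

```shell
#!/bin/bash
# Hypothetical helpers, not Torque commands.

# Print "<node> <cores>" for each node in a Torque node file.
# Uses $PBS_NODEFILE when no file name is given.
summarize_nodefile() {
    nodefile="${1:-$PBS_NODEFILE}"
    sort "$nodefile" | uniq -c | awk '{ print $2, $1 }'
}

# Print the total number of cores assigned (one non-empty line per core).
count_cores() {
    nodefile="${1:-$PBS_NODEFILE}"
    grep -c . "$nodefile"
}

# Inside a job script one might write, for example:
#   echo "Running on $(count_cores) cores:"
#   summarize_nodefile
```

The core count obtained this way can be handed to an MPI launcher instead of hard-coding it in the script.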

qsub usage

The qsub command is used to submit jobs to the cluster. qsub's behavior can be customized with command line options or script directives. Note that all script directives must precede all executable commands, so it's best to put all directives at the top of the script file.

For example:

- To use qsub to submit a 32-core job to the batch queue (the default queue) with a maximum walltime of 10 hours (meaning that the job will be killed after 10 hours have elapsed):

qsub -l nodes=4:ppn=8,walltime=10:00:00 jobscript

This can be specified in the jobscript with

#PBS -l nodes=4:ppn=8
#PBS -l walltime=10:00:00

in which case the job would be submitted with

qsub jobscript

- The default resource request if one isn't specified on the command line or in the jobscript is

nodes=1:ppn=1 -l walltime=5:00

meaning 1 core on 1 node, with a walltime of 5 minutes.
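To tie the directives above together, here is a minimal sketch that writes a job script with the resource request placed before any executable command. The helper name make_jobscript and the application name ./mpi-application are placeholders chosen for this example; adapt the body to whatever your job actually runs.

```shell
#!/bin/bash
# Hypothetical helper: print a job script for the given resource request.
# Usage: make_jobscript <nodes> <cores-per-node> <walltime> <command...>
make_jobscript() {
    nodes="$1"; ppn="$2"; walltime="$3"
    shift 3
    cat <<EOF
#!/bin/bash
#PBS -l nodes=${nodes}:ppn=${ppn}
#PBS -l walltime=${walltime}
cd \$PBS_O_WORKDIR
$*
EOF
}

# Print a script requesting 4 nodes with 8 cores each for 10 hours.
make_jobscript 4 8 10:00:00 ./mpi-application

# To submit it, one would save the output and pass the file to qsub:
#   make_jobscript 4 8 10:00:00 ./mpi-application > jobscript
#   qsub jobscript
```

Keeping the request in the script rather than on the command line means the same file records how the job was run.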

For example:

- For scientific applications that rely heavily on floating point operations, such as STAR-CCM+, LS-DYNA, OpenFOAM, and university CFD research codes, users should specify 16 cores per node (ppn=16) rather than 32, since each node has a total of 16 floating point units (FPUs). Specifying 32 cores per node (ppn=32) will not provide substantial performance gains, but will use up commercial licenses.

- To use the nhtsa queue which is not the default queue and has 64 GB nodes:

- To use qsub to submit a 64-core job with a maximum walltime of 10 hours (meaning that the job will be killed after 10 hours have elapsed):


qsub -l nodes=2:ppn=32,walltime=10:00:00 jobscript

This can be specified in the jobscript with

#PBS -l nodes=2:ppn=32
#PBS -l walltime=10:00:00

in which case the job would be submitted with

qsub jobscript


Initial directory

Torque by default will run your script from your home directory. You can specify a different initial directory with

qsub -d ...

For example, to start in your current directory:

qsub -d `pwd`

Large memory jobs

There are two queues that are available for large memory jobs.

- batch64 queue

- 2 nodes numbered n003 and n004

- Same design as batch queue

- 64GB of RAM per node

- available for general use

- batch128 queue

- 2 nodes numbered n001 and n002

- Same design as batch queue

- 128GB of RAM per node

- available for general use

As an example, to submit your jobs to the batch128 queue:

qsub -q batch128 ...

Jobs on these nodes are limited to 72 hours.

Swap has been disabled on these nodes.

Short jobs

Weekdays from 7am CDT to 7pm CDT, two nodes are set aside for jobs on Phoenix with walltimes of 2 hours or less. Maui will automatically schedule short jobs to these nodes. No policy is currently in place for Zephyr.

Usage policies

Maximum walltime

Jobs which exceed these walltime limits will be rejected by Torque.

On Zephyr:

- batch queue: 168 hours
- batch64 queue: 72 hours
- batch128 queue: 72 hours

On Phoenix:

- quadcore queue: 168 hours
- thirtytwogb queue: 72 hours

Quotas

Jobs that exceed the following quotas will be blocked by Maui. Blocked jobs can be seen with the command

showq -b

Node quotas

The batch64 and batch128 queues on Zephyr and the thirtytwogb queue on Phoenix have a limit of 1 job per user. This limit can be overridden for a particular job by making the job preemptible using the Maui QOS feature.

Queue quotas

The batch64 and batch128 queues on Zephyr and the thirtytwogb queue on Phoenix have a limit of 1 job per user. This limit can be overridden for a particular job by making the job preemptible using the Maui QOS feature.

Quality of Service (QOS)

In order to override the cluster's usage quotas, you can submit your jobs with a preemptible QOS. The caveat is that a preemptible job may be killed at any time, regardless of its wallclock limit, if doing so would enable another user's ordinary, non-preemptible job to run (your own non-preemptible jobs will not preempt your preemptible jobs).

To make a job preemptible, you can submit it with a QOS (quality of service) flag. For example:

qsub -W x=QOS:preemptible ... <jobscript>

You can make a submitted job (whether queued or running) preemptible with the Maui command setqos:

setqos preemptible <jobid>

And you can make a job non-preemptible by setting its QOS to DEFAULT:

setqos DEFAULT <jobid>

You can check a job's QOS with the command checkjob.

Note that because of a bug in Torque, if you want to specify a node access policy and a QOS, you should not specify the QOS to Torque, but should instead submit the job without a QOS, then set the QOS using setqos.

How the scheduler determines which job to run next

Maui runs queued jobs in priority order (blocked jobs are not considered). Priority is a function of

- your recent cluster usage (the less usage, the higher the priority)

- the number of nodes requested (the more nodes, the higher the priority)

- a combination of the walltime requested (the less time, the higher the priority) and how long the job has been waiting to run

This last factor is referred to as the "expansion factor" in the Maui documentation, and is equal to

1 + time since submission / wallclock time

which, all else being equal, is designed to cause jobs to wait on average only as long as their requested walltime.
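The formula is easy to evaluate by hand. The sketch below does so with awk, taking both times in seconds; the function name expansion_factor is invented for this example, and the result is only the raw factor, not the weighted priority that Maui finally assigns.

```shell
#!/bin/bash
# Hypothetical helper: evaluate 1 + (time since submission / wallclock time).
# Arguments: seconds since submission, requested walltime in seconds.
expansion_factor() {
    awk -v waited="$1" -v walltime="$2" \
        'BEGIN { printf "%.2f\n", 1 + waited / walltime }'
}

expansion_factor 3600 7200   # waited 1 hour, requested 2 hours    -> 1.50
expansion_factor 3600 600    # waited 1 hour, requested 10 minutes -> 7.00
```

After the same wait, the job with the shorter requested walltime has the larger factor, which is why short jobs tend to move up the queue faster.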

Maui also uses "backfill" to schedule short and small jobs when doing so would not interfere with higher priority jobs.

To find out how the priority of your job was calculated, use the Maui command diagnose, which will show something like this:

$ diagnose -p

diagnosing job priority information (partition: ALL)

Job PRIORITY* FS( User) Serv(XFctr) Res( Proc)

Weights -------- 1( 1) 1( 1) 1( 1)

46 113 6.9( -9.0) 1.2( 1.6) 91.9(120.0)

47 77 9.5( -9.0) 1.6( 1.5) 88.9( 84.0)

Percent Contribution -------- 8.0( 8.0) 1.4( 1.4) 90.6( 90.6)

FS refers to the fairshare factor. For both jobs, the user's usage is above the target usage, making the fairshare factor negative.

The exact weight given to each factor is subject to change as we attempt to find a balance between high utilization and fairness to all users.

The diagnose command will also tell you how much you and other users have been using the cluster over the past week.

$ diagnose -f
FairShare Information

Depth: 7 intervals   Interval Length: 1:00:00:00   Decay Rate: 0.75

FS Policy: UTILIZEDPS
System FS Settings:  Target Usage: 0.00   Flags: 0

FSInterval          %  Target        0        1        2        3        4        5        6
FSWeight      ------- -------   1.0000   0.7500   0.5625   0.4219   0.3164   0.2373   0.1780
TotalUsage     100.00 -------  23779.0   4358.0   6114.8  10451.7  10853.7   6915.1   7861.7

USER
-------------
bvannemreddy     0.00    5.00  -------  -------  -------  -------  -------  -------  -------
ac.smiyawak*    16.74    5.00    18.66    32.48     0.75    16.23     0.71    17.28    27.11
bernard          0.09    5.00  -------  -------  -------     0.80     0.00     0.02     0.00
ac.abarsan       2.17    5.00     2.08  -------  -------  -------  -------    17.27     8.36

The asterisk indicates that the user is above their fairshare target, and that their jobs will be given a negative fairshare weight.
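The FSWeight row in the output above follows directly from the decay rate: each interval's weight is the decay rate raised to the interval's index. The sketch below reproduces that row; the function name fs_weights is made up for this example, and it shows only the weights, not how Maui combines them with usage.

```shell
#!/bin/bash
# Hypothetical helper: print the fairshare weight of each interval,
# i.e. decay^0, decay^1, ..., decay^(depth-1), to four decimal places.
# Arguments: decay rate, depth (number of intervals).
fs_weights() {
    awk -v decay="$1" -v depth="$2" 'BEGIN {
        w = 1
        for (i = 0; i < depth; i++) {
            printf "%s%.4f", (i ? " " : ""), w
            w *= decay
        }
        print ""
    }'
}

fs_weights 0.75 7
# -> 1.0000 0.7500 0.5625 0.4219 0.3164 0.2373 0.1780
```

With a decay rate of 0.75 and one-day intervals, usage from six days ago counts for less than a fifth of today's usage.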

More qsub options

Receiving mail from Torque

You can have Torque automatically email you when a job begins, ends, or aborts.

qsub -m <mail-options>

The <mail-options> argument consists of either the single character 'n', or one or more of 'a', 'b', 'e'.

- n: No mail will be sent.
- a: Mail will be sent when the job aborts.
- b: Mail will be sent when the job begins.
- e: Mail will be sent when the job ends.

Redirecting job output

Torque will write the standard output of your jobscript to <jobname>.o<jobnumber>, and the standard error to <jobname>.e<jobnumber>. To write them both to the standard output file, submit the job with

qsub -joe ...

or in your jobscript use

#PBS -joe

To write standard output to a different file, submit the job with

qsub -o <some-file>

or in your jobscript:

#PBS -o <some-file>

These can be combined; so, for example, submitting a job with

qsub -joe -o logfile

will cause both standard output and standard error to be written to the file 'logfile' in the directory in which the job is running.

You can see the output file using the command qpeek:

qpeek <jobid>

qpeek has several useful options:

$ qpeek -?

qpeek: Peek into a job's output spool files

Usage: qpeek [options] JOBID

Options:

-c Show all of the output file ("cat", default)

-h Show only the beginning of the output file ("head")

-t Show only the end of the output file ("tail")

-f Show only the end of the file and keep listening ("tail -f")

-# Show only # lines of output

-e Show the stderr file of the job

-o Show the stdout file of the job (default)

-? Display this help message

Using fewer than 8 cores per node on Phoenix and fewer than 32 cores per node on Zephyr

For Phoenix

- To request and use fewer than 8 cores per node, specify the number of cores you need with the ppn node property:

qsub -q quadcore -l nodes=<number of nodes>:ppn=<cores per node> ...

For example, to use 4 cores on each of 8 nodes, do

qsub -q quadcore -l nodes=8:ppn=4 ...

- By default, the scheduler will assign multiple jobs to a node as long as the node has resources available, and as long as the jobs are owned by the same user. This behavior can be prevented like this:

qsub -W x=NACCESSPOLICY:SINGLEJOB ...

so the previous command would be

qsub -q quadcore -l nodes=8:ppn=4 -W x=NACCESSPOLICY:SINGLEJOB ...

(It would probably make more sense to have SINGLEJOB as the default, but Maui for some reason does not allow that policy to be overridden.)

For Zephyr

- To request and use fewer than 32 cores per node, specify the number of cores you need with the ppn node property:

qsub -q batch -l nodes=<number of nodes>:ppn=<cores per node> ...

For example, to use 4 cores on each of 8 nodes, do

qsub -q batch -l nodes=8:ppn=4 ...

- By default, the scheduler will assign multiple jobs to a node as long as the node has resources available, and as long as the jobs are owned by the same user. This behavior can be prevented like this:

qsub -W x=NACCESSPOLICY:SINGLEJOB ...

so the previous command would be

qsub -q batch -l nodes=8:ppn=4 -W x=NACCESSPOLICY:SINGLEJOB ...

(It would probably make more sense to have SINGLEJOB as the default, but Maui for some reason does not allow that policy to be overridden.)

Forcing jobs to run sequentially

- To have a job start only after another job has finished, use the depend job attribute:

qsub -W depend=afterany:<some job-id> ...

For example,

qsub -W depend=afterany:1198 ...

Many other dependency relationships are possible; please see the qsub man page.
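When chaining jobs from a script, the job-id printed by qsub can be captured and reused. The sketch below builds the dependency option from a full job-id; the helper names job_number and depend_option, the host suffix host1, and the script names are placeholders for this example.

```shell
#!/bin/bash
# Hypothetical helpers for chaining jobs.

# Strip everything after the first dot, leaving the numerical job-id.
job_number() {
    printf '%s\n' "${1%%.*}"
}

# Build the qsub option that makes a job wait for the given job to finish.
depend_option() {
    printf -- '-W depend=afterany:%s\n' "$(job_number "$1")"
}

depend_option 1198.host1   # -> -W depend=afterany:1198

# A two-step chain might then look like this:
#   first=$(qsub step1.sh)
#   qsub $(depend_option "$first") step2.sh
```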

Passing variables to a jobscript

By default, no environment variables are directly exported from the submission environment to the execution environment in which a job runs. This means that you'll usually need to load any modules the job requires in your job script, or in your shell configuration files, as described above, or use one of the following techniques to pass variables to a job.

- To pass all environment variables:

export name=abc; export model=neon
qsub -V ...

- To pass only specified variables:

qsub -v name=abc,model=neon ...

or

export name=abc; export model=neon
qsub -v name,model ...

- You can also specify in a job script which variables Torque should import into the execution environment from the submission environment. To import all environment variables:

#PBS -V

or to import only specified variables:

#PBS -v name,model

Note that you cannot assign values to the variables this way.
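Because variables are not passed by default, a job script can usefully check that the ones it depends on actually arrived. The sketch below does this for the name and model variables used in the examples above; the function name check_job_vars is invented for this illustration.

```shell
#!/bin/bash
# Hypothetical helper: succeed only if the variables the job needs are set.
# ${var:?message} prints the message and aborts the subshell when var is
# unset or empty, so the function returns a non-zero status in that case.
check_job_vars() {
    (
        : "${name:?name was not passed to the job}"
        : "${model:?model was not passed to the job}"
    )
}

# Near the top of a job script one might write:
#   check_job_vars || exit 1
```

Failing early this way puts a clear message in the job's standard error file instead of a confusing failure later in the run.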

Job and cluster info

- To see a list of jobs which are running, queued, and blocked, use the Maui command showq:

showq [-r | -i | -b ]

For example,

$ showq -r

JobName S Par Effic XFactor Q User Group MHost Procs Remaining StartTime

67174 R DEF 100.00 0.0 pr joe joe n101-ib 1 00:23:32 Wed Apr 28 14:05:12

67233+ R DEF 182.60 1.1 pr jan jan n082-ib 8 14:53:30 Thu Apr 29 13:37:49

66942- R DEF 49.71 0.3 pr lin lin n056-ib 64 18:59:14 Tue Apr 27 08:41:35

The "+" indicates that the job was backfilled; the "-" that the job is preemptible.

showq lists queued (Idle) jobs in descending priority order. showq may show a job as running for up to a minute before it actually starts. To see if it's actually running in that first minute, use Torque's qstat:

qstat -a

- To see how many cores are currently free for a job of a given walltime in each queue, use qbf:

qbf -d <walltime>

- To get more detailed statistics about current cluster usage, use our command qsum:

qsum

- Use pbstop for a graphical display of cluster usage:

pbstop [-?]

- pestat will give node-by-node cluster information:

pestat [-?]

- To get a lot of info from Torque about a running or queued job:

qstat -f <job-id>

- To diagnose problems with a job, you'll need to use both the Torque command tracejob and the Maui command checkjob, and possibly the Maui command diagnose:

tracejob <job-id>
checkjob [-v] <job-id>
diagnose -j <job-id>

- If you want to find out when Maui thinks your job will run, use showstart:

showstart <job-id>

$ showstart 56
job 56 requires 32 procs for 1:40:00
Earliest start in 1:32:48 on Wed Nov 25 18:02:37
Earliest completion in 3:12:48 on Wed Nov 25 19:42:37

- If you want to find out how small and how short you need to make a job for it to run immediately in a particular queue, use the Maui command showbf:

showbf [-f <node-feature>] [-n nodecount] [-d walltime]

where <node-feature> is one of quadcore, eightgb, or thirtytwogb. ("bf" is for backfill, the algorithm used to schedule short and small jobs without interfering with higher priority jobs.) Maui unfortunately doesn't take into account all of our scheduling policies, so showbf's core counts may be too high; nor does it know about our queue walltime limits.

- We have our own utility, qbf, which summarizes the output of showbf for each queue:

$ qbf
Queue         Cores   Time
quadcore      112     5:49:09
quadcore      40      3:00:00:00
thirtytwogb   8       1:00:00:00

This means that a 40 core job of any duration will run immediately, but a job requesting more than 40 cores will run immediately only if it requests less than 5 hours, 49 minutes. qbf is, however, subject to the same caveat as showbf.

- To get a list of the nodes on which jobs are running (use -s to sort the list):

jobnodes [-s] <jobid> ...

$ jobnodes -s 45124 45873
n008,n015

- To get a list of the nodes on which your jobs are running (use -s to sort the list):

mynodes [-s]

$ mynodes -s
n010,n097

- To see if your application is still running on your nodes:

$ pdsh -w $(mynodes) ps

n046:   PID TTY          TIME CMD
n046: 13056 ?        00:00:00 bash
n046: 13717 ?        00:00:00 bash
n046: 13721 ?        00:00:00 sshd
n046: 13722 ?        00:00:00 ps

For all of these commands, only the numerical portion of the job-id needs to be specified.
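The times shown by showstart and qbf above appear to use a [D:]HH:MM:SS layout (for example, 5:49:09 is 5 hours, 49 minutes). When comparing such a value with the walltime you plan to request, it can help to convert both to seconds. The sketch below assumes that layout; the function name to_seconds is made up for this example.

```shell
#!/bin/bash
# Hypothetical helper: convert [[[D:]HH:]MM:]SS to a number of seconds.
to_seconds() {
    printf '%s\n' "$1" | awk -F: '{
        n = NF
        secs = $n + 0                          # seconds
        if (n >= 2) secs += $(n - 1) * 60      # minutes
        if (n >= 3) secs += $(n - 2) * 3600    # hours
        if (n >= 4) secs += $(n - 3) * 86400   # days
        print secs
    }'
}

to_seconds 5:49:09      # -> 20949
to_seconds 3:00:00:00   # -> 259200
```

A request of 10:00:00 (36000 seconds) is longer than 5:49:09, so in the qbf example above such a job would start immediately only if it needs 40 cores or fewer.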

qsub-stat

qsub-stat is available only on Phoenix and requires the ls-dyna/base module to be loaded.

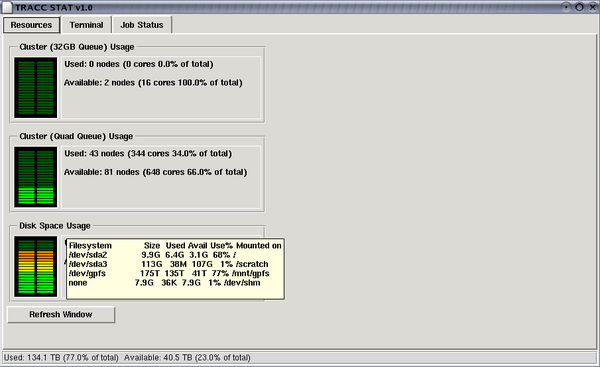

Most of the commands for tracking your jobs and checking cluster status can be accessed via the graphical application qsub-stat. Please refer to the section on NoMachine to learn how to run graphical sessions on the TRACC cluster. qsub-stat has three tabs. The first one shows the percentage usage of the two queues (8GB and 32GB) and the disk space usage. Hovering the mouse cursor over a meter shows more detailed information on usage of the queues and the disk space.

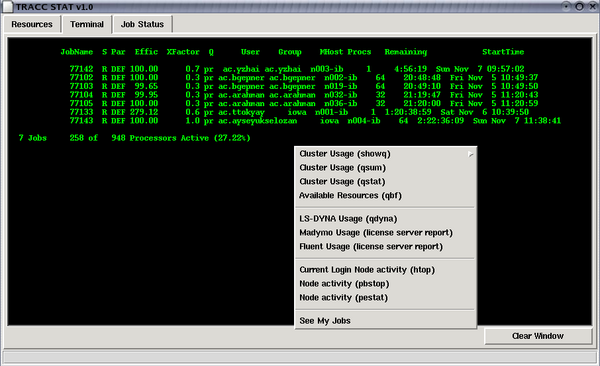

In the Terminal tab, most of the statistics commands explained previously can be called through a mouse menu. Press and hold the right or left mouse button to access the list of commands.

The available commands are:

- Cluster Usage (showq):
  - showq
  - showq -r (running)
  - showq -b (blocked)
- Cluster Usage (qsum)
- Cluster Usage (qstat)
- Available Resources (qbf)

- LS-DYNA Usage (qdyna)
- Madymo Usage (license server report)
- Fluent Usage (license server report)

- Current Login Node activity (htop)
- Node activity (pbstop)
- Node activity (pestat)

- See My Jobs

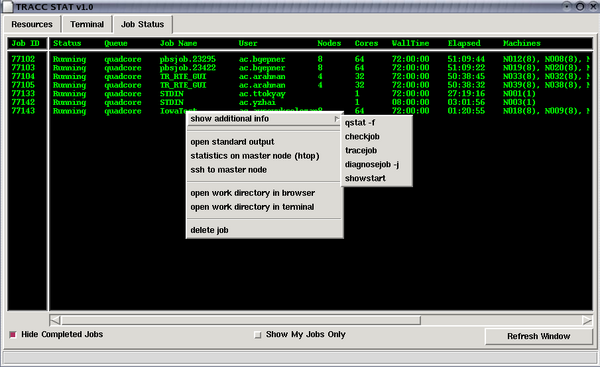

Current usage of the cluster can also be viewed in the Job Status tab. When you press and hold the right or left mouse button on a job, you will see a menu of commands that you can apply to it (some of them will work only on your own jobs). The available commands are:

- Show additional info:
  - qstat -f
  - checkjob
  - tracejob
  - diagnosejob -j
  - showstart

- open standard output
- statistics on master node (htop)
- ssh to master node

- open work directory in browser
- open work directory in terminal

- delete job (qdel)

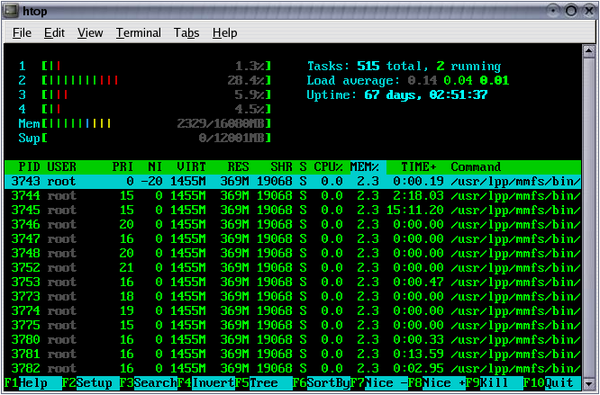

The htop command shows live statistics on the master node of the current job.

Deleting a job

To delete a job, use Torque's qdel or Maui's canceljob, which simply calls qdel itself:

qdel <job-id>
canceljob <job-id>

Torque will delete the job immediately with either command, but Maui may not know about the deletion for up to a minute, during which time the job will continue to show up in the output of showq.

If either Torque or Maui tells you it can't delete the job, please send us an email and we'll delete it for you.

Only the numerical portion of the job-id needs to be specified.

Interactive Jobs

You can get interactive access to a compute node with Torque by using the -I switch to qsub:

$ qsub -I ...
qsub: waiting for job nnnn.host1 to start
qsub: job nnnn.host1 ready
$ cd $PBS_O_WORKDIR

If you want to run a GUI application on the compute node, use the -X switch:

qsub -I -X ...

For this to work, you will need to either use the NX client to connect to the cluster, or enable trusted X11 forwarding when you ssh to the cluster. Windows SSH clients will allow you to enable forwarding in the preferences for the connection. Under Linux and MacOS, you would do this:

$ ssh -Y login.tracc.anl.gov

Also see below for an alternative means of directly accessing compute nodes.

Using GUI applications on a compute node

If you want to use a GUI application on a compute node, you will need to enable trusted X11 forwarding when you ssh to the cluster, and again when you ssh to the compute node (as described above). Windows SSH clients will allow you to enable forwarding in the preferences for the connection. Under Linux and MacOS, you would do this:

$ ssh -Y login.tracc.anl.gov

After logging in to a login node, you would then do this to access the compute node:

$ ssh -Y n001

If you're using the NX client to connect to the cluster, you won't need to ssh to a login node, but you will still need to ssh to a compute node.

Accessing a compute node directly

The easiest way to do this is by having Torque give you interactive access to a node. But if you want to start a process running on a compute node and have it continue to run after you log out, use the method described here.

- You can access any compute node which is running one of your jobs with the command

ssh <compute-node>

You can use the command

qstat -n1 <job-id>

to find out which nodes are running <job-id>.

- If you want Torque to assign you nodes, but don't need it to run a job on your behalf, you can do (for example)

echo sleep 24h | qsub -l select=1,walltime=24:00:00

and then ssh to the node.

Using licensed software

For some of our software we have a limited number of licenses. To ensure that there will be sufficient licenses available when Maui schedules your job, you will need to submit your job under an application-specific account. Any application wrapper scripts we have will take care of this for you, but if you need to specify the account manually, you would do it like this:

- LS-DYNA:

qsub -A dyna ...

Debugging a job

Some things to keep in mind or to try when your job doesn't run as expected:

- Jobs don't inherit any environment variables from the submission environment. This means that if your job requires that PATH, LD_LIBRARY_PATH, MPI_HOME, or any other variable be set, you'll need to set it in the job script (directly or with a module) or in your shell configuration files.

- Try running your job interactively on a compute node, like this:

$ qsub -I ...
qsub: waiting for job nnnn.host1 to start
qsub: job nnnn.host1 ready
$ cd $PBS_O_WORKDIR

Now run your job script or start up a debugger with your program, just as you would on a login node.

- Try some simple jobs, and look at the standard output and standard error files (STDIN.o<job-id> and STDIN.e<job-id>):

echo | qsub
echo env | qsub

- If you want to see the output of your job as it's being produced, use qpeek:

qpeek <jobid>

Undeliverable job output

If Torque cannot copy the files containing the output of your job to the job's working directory, the files will eventually be copied to /mnt/gpfs/undelivered. They will be deleted after 10 days.

Requeuing of jobs

If your job dies due to a problem with the cluster, it will not automatically be requeued. If you would like it to be, do

qsub -r y ...

Reserving the cluster

Users cannot directly reserve the cluster, but if you think you might have a need for a reservation, please contact us by email as far in advance as possible.